How to use predictive cohort modeling to drive smarter campaign decisions

If there’s one metric that consistently stirs debate among marketers, it’s LTV. Some ignore it entirely, calling it too abstract to help with today’s problems. After all, who cares what a user might bring in five years if you’ve got to show ROI next quarter? Others cling to it blindly, using it to justify budgets and campaign bets. Then there’s the skeptical middle: those who see its potential, but have learned not to trust the LTV number in their dashboards because they’ve watched it swing wildly over time.

To be blunt, each of these approaches — ignoring LTV, trusting it blindly, or circling it with doubt — leads to trouble. If LTV is just a static number pulled from a dashboard once a quarter, it’s useless. But if you’re tracking how it evolves across cohorts in near real-time, it becomes a tool to understand where your budget is actually working and where your growth is hiding.

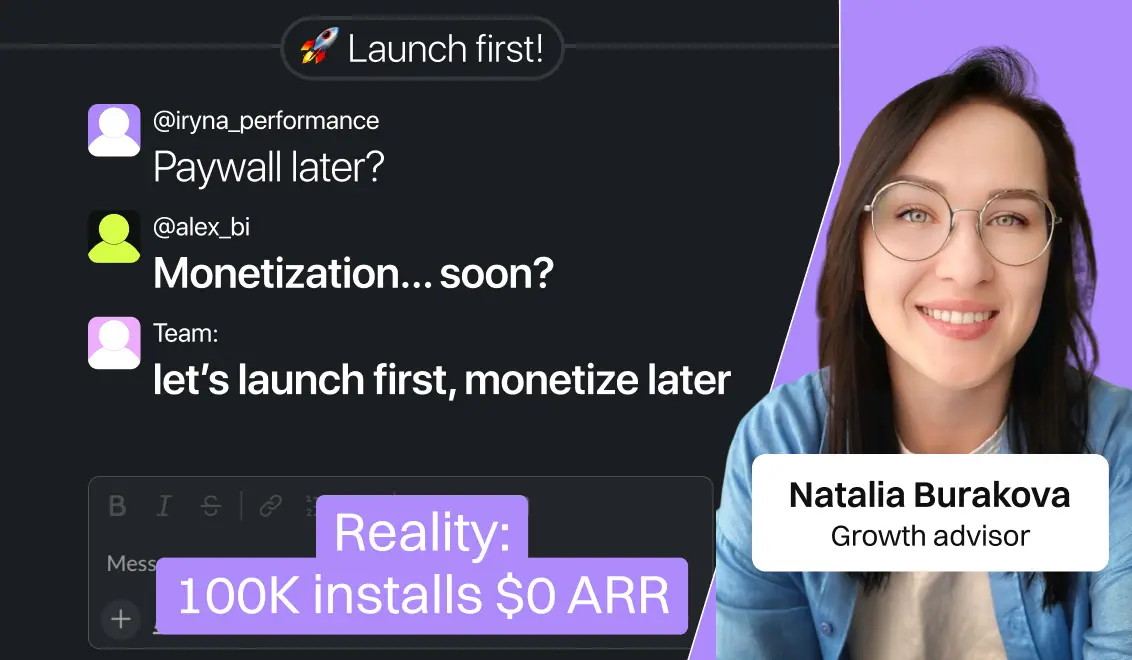

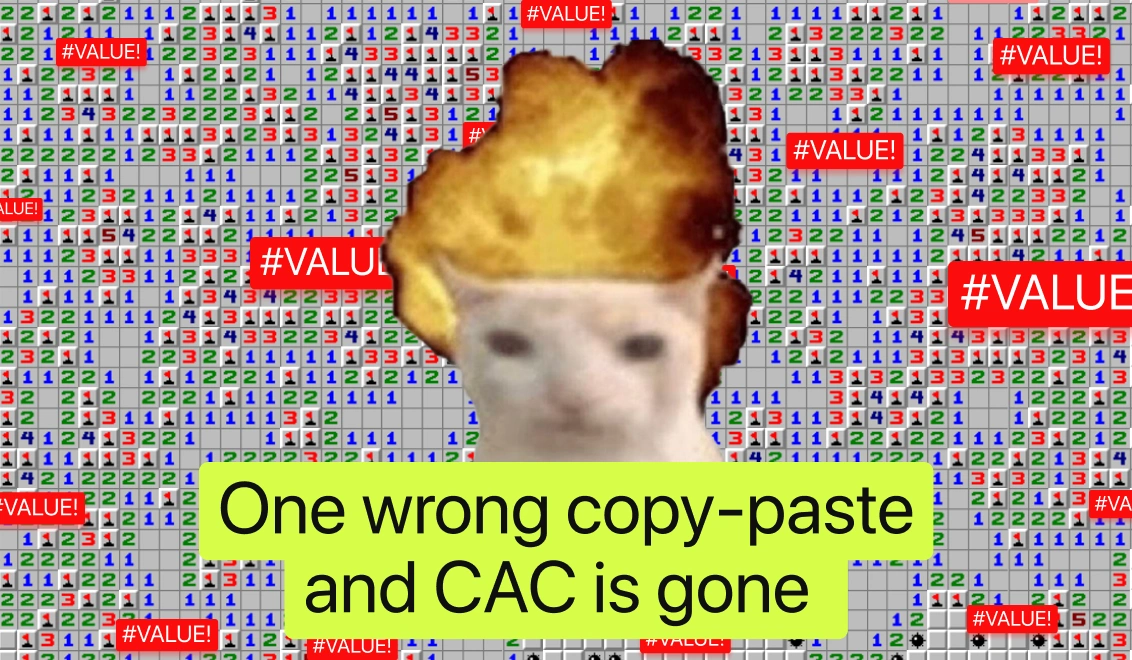

A typical scenario plays out like this: a team cobbles together an LTV model using ARPU, churn, and retention data from App Store Connect, then runs forecasts in Excel or dashboards. But when it comes down to it, they’re left scratching their heads: “Okay… we have predictions, but how do we actually use this to steer campaign spend?” This exact pain point comes up frequently in data‑science and app developer groups: LTV models exist, but they rarely influence budget or creative decisions.

These recurring cases highlight a core issue: prediction without actionable guidance is just noise, and that’s part of why so many teams end up either ignoring LTV altogether or over‑relying on it without real confidence.

Meanwhile, with cohort-based forecasting and the ability to act on it, the whole game shifts in your favor. A 2025 guide by AppSamurai recommends using predictive LTV models built on early indicators like Day‑7 retention and first-week engagement to identify high-value acquisition sources before full lifetime data becomes available.

So here's the core idea: LTV becomes meaningful only when it's tracked dynamically, when you treat it as a signal that evolves with user behavior instead of something you check at quarter's end. And that’s what predictive cohort modeling makes possible: spotting value in motion.

In the next sections, we’ll walk through how predictive cohort modeling actually shifts the playbook from reacting to lagging indicators to anticipating value while it’s still forming.

What teams unlock with cohort analysis predictions

You’ve probably seen how traditional LTV often feels like a post-mortem. A campaign runs, retention sags, monetization is shaky, and by the time your dashboard catches up, it’s too late to change the outcome.

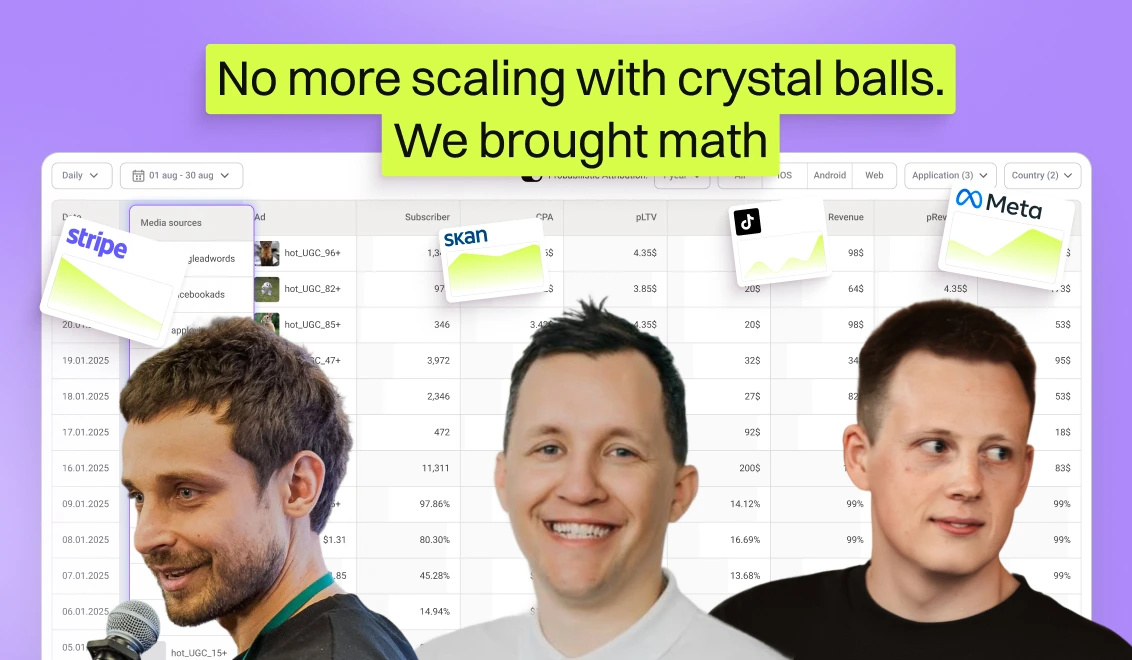

Cohort predictive analytics flips that script. Instead of averaging value across all users or waiting 30 days for numbers to settle, it looks at how users behave and uses that to predict where your budget will pay off. And Campaignswell’s model takes it further. By training on behavior patterns from thousands of similar users across apps, it can generate reliable predictions even when your own historical data is still thin. So you’re working with signal from the early days of a test.

It works like this: users are grouped into cohorts based on shared traits, like pricing variant, acquisition source, or first-session behavior. But what makes it powerful is what you do next. You analyze the shape of their journey: how deep they go into onboarding, how often they return in the first week, which features they interact with early. These are behavior patterns that often mirror high or low LTV profiles.
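To make the grouping step concrete, here is a minimal sketch in Python. It assumes a simplified user record with hypothetical fields (`source`, `onboarding_steps_done`, `active_days_week1`) and a hypothetical five-step onboarding; real event schemas will look different, and this is an illustration of the idea rather than how any particular tool does it.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class User:
    # Hypothetical fields for illustration; real event schemas will differ.
    user_id: str
    source: str                 # acquisition source, e.g. "pinterest"
    onboarding_steps_done: int  # onboarding steps completed in the first session
    active_days_week1: int      # distinct active days during the first week


def cohort_profiles(users, onboarding_steps_total=5):
    """Group users by acquisition source and summarize their early behavior."""
    groups = defaultdict(list)
    for user in users:
        groups[user.source].append(user)

    profiles = {}
    for source, members in groups.items():
        n = len(members)
        steps_done = sum(m.onboarding_steps_done for m in members)
        profiles[source] = {
            "users": n,
            # Average share of onboarding completed, from 0.0 to 1.0.
            "onboarding_depth": steps_done / (n * onboarding_steps_total),
            # Average number of active days in the first week.
            "avg_active_days_week1": sum(m.active_days_week1 for m in members) / n,
        }
    return profiles


# Made-up sample data, only to show the shape of the output.
users = [
    User("u1", "pinterest", 5, 4),
    User("u2", "pinterest", 4, 6),
    User("u3", "search", 5, 1),
    User("u4", "search", 2, 0),
]
profiles = cohort_profiles(users)
for source, profile in sorted(profiles.items()):
    print(source, profile)
```

The same function could group by pricing variant or first-session behavior instead of source. What matters is that each cohort ends up with a compact behavioral profile you can compare against cohorts whose long-term value you already know.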

Say you launch two onboarding flows. Users from flow A complete setup quickly but rarely return. Flow B takes longer but creates cohorts that explore more features and stick around. Predictive modeling will flag flow B as the better long-term bet, well before raw revenue catches up.

Teams that get this right don’t just react to lagging metrics. They use behavioral fingerprints to prioritize creative, allocate budget, and forecast LTV at a cohort level so growth decisions aren’t based on guesses or averages, but on how value actually builds over time.

And that’s where things really start to scale. But what does that look like in practice?

Cohort predictions from user behavior in practice

Here’s a typical story: a team launches new creatives across multiple channels. They get excited: installs are flowing in. But instead of waiting weeks for revenue data to trickle in, they plug early Day-2 engagement into their predictive model. One cohort, users from a Pinterest ad with a 14-second loop, starts showing the same early behavior patterns as their most profitable users from a previous campaign.

The model flags it as a high-value cohort. Within 48 hours, they double the spend on that channel and kill two other underperforming creatives. By the time Day-30 revenue shows up, the decision has already paid off.

Another team sees the reverse. A channel is delivering cheap installs, but their cohort dashboard shows a steep drop-off in key engagement metrics by Day 3. The model projects low LTV. Instead of riding out the week, they pull the plug and reinvest that budget into a stronger channel, saving tens of thousands.

These teams aren’t waiting for LTV to show up on a report. They’re using predictive cohorts as an early, evolving signal, one that helps them move faster, scale smarter, and catch problems before they turn into expensive post-mortems.

Here are two quick real-world examples that show the kind of results teams can achieve when they approach LTV this way. In both cases, they used Campaignswell, a tool built specifically for predictive cohort modeling. With cohort predictive analytics, it helps teams go beyond static dashboards by tracking early user behavior, forecasting long-term value, and surfacing where to scale or stop spending before the budget’s gone. And here’s how it plays out in practice:

- VPN app team: synced up all data streams, trusted the model, and took iOS ROI from 7% to 50%, halved payback time, and scaled Android spend 3x without losing margin.

- AI companion app: launched a new funnel, used predictive LTV to pick the winners early, and ended up with a setup that now brings in 20% of the company’s total revenue.

Why predictive cohorts beat A/B tests when time matters

Running A/B tests takes time, and when you're managing multiple creatives, channels, or geos, that delay costs money. Predictive cohort modeling flips that by giving early directional insight, usually in days, not weeks.

Airbridge, featured at the “Global Game Growth 2025” event, advocates predictive Lifetime Value (pLTV) over reliance on Day-7 or Day-30 retention because payback periods in mobile are stretching longer. With pLTV, teams can estimate value across 90–180 days quickly, making smarter scaling decisions faster.
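One simple way to picture a longer-horizon estimate is to fit a curve to the first few days of retention and extend it. The sketch below is an assumption-heavy illustration, not the method used by Airbridge or any specific vendor: it assumes retention follows a power law, `retention(t) = a * t**(-b)`, and that revenue per active user per day (`arpdau`) stays constant. The function names and sample numbers are hypothetical.

```python
import math


def fit_power_law(days, retention):
    """Least-squares fit of retention(t) = a * t**(-b) in log-log space.

    Requires at least two distinct days >= 1 and retention values > 0.
    """
    xs = [math.log(d) for d in days]
    ys = [math.log(r) for r in retention]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    a = math.exp(mean_y - slope * mean_x)
    return a, -slope


def projected_ltv(days, retention, arpdau, horizon_days):
    """Project revenue per install through `horizon_days` from early retention."""
    a, b = fit_power_law(days, retention)
    # Day 0 counts as fully retained; later days follow the fitted curve, capped at 1.
    expected_active_days = 1.0 + sum(
        min(1.0, a * t ** (-b)) for t in range(1, horizon_days + 1)
    )
    return arpdau * expected_active_days


# Made-up Day-1, Day-3, and Day-7 retention for two cohorts.
observed_days = [1, 3, 7]
ltv_a = projected_ltv(observed_days, [0.40, 0.25, 0.18], arpdau=0.12, horizon_days=90)
ltv_b = projected_ltv(observed_days, [0.40, 0.15, 0.08], arpdau=0.12, horizon_days=90)
print(f"cohort A: {ltv_a:.2f}, cohort B: {ltv_b:.2f}")
```

Both cohorts start with the same Day-1 retention, but the one that decays more slowly projects to a noticeably higher 90-day value. Production models typically use far richer signals than three retention points, so treat this as a way to build intuition, not a forecast you would budget against.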

With A/B testing, results often take weeks, and you might run out of time before you scale intelligently. Predictive cohorts, on the other hand, guide decisions within 48–72 hours:

- Predictive models flag promising creatives early so marketers can scale winners fast

- Underperforming variants get paused before they drain budget

- Spend shifts are informed by projected long-term value, not just short-term click metrics
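The three bullets above boil down to a simple rule: compare what a cohort is projected to be worth against what it costs to acquire. Here is a minimal sketch of such a rule. The thresholds (`scale_above`, `pause_below`), the creative names, and the numbers are all hypothetical; a real team would tune them to its own margins and payback targets.

```python
def spend_action(projected_ltv, cost_per_install, scale_above=1.3, pause_below=0.8):
    """Return 'scale', 'hold', or 'pause' from the projected LTV-to-CPI ratio."""
    if cost_per_install <= 0:
        raise ValueError("cost_per_install must be positive")
    ratio = projected_ltv / cost_per_install
    if ratio >= scale_above:
        return "scale"
    if ratio < pause_below:
        return "pause"
    return "hold"


# Made-up creatives with projected LTV and cost per install (CPI) in dollars.
creatives = {
    "pinterest_loop_14s": {"projected_ltv": 6.50, "cpi": 3.10},
    "banner_static": {"projected_ltv": 2.00, "cpi": 3.00},
    "video_30s": {"projected_ltv": 3.20, "cpi": 3.00},
}
actions = {
    name: spend_action(c["projected_ltv"], c["cpi"]) for name, c in creatives.items()
}
for name, action in actions.items():
    print(name, "->", action)
```

The middle "hold" band is a deliberate design choice: early projections are noisy, so it helps to act only when the signal is clearly above or below break-even.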

Recent research like the RL‑LLM‑AB framework shows AI-enhanced A/B testing can automate variant selection and adapt in real-time, but still requires learning time and significant traffic to perform well. In contrast, pure predictive modeling distills early signals into forecasted value without waiting for full consumer response, meaning faster ROI insight.

By converting early behavioral signals into predictive insights, cohort-based modeling empowers UA teams to make faster, smarter decisions and avoid wasting budget on trials that were never heading toward real value.

Key use cases where user-behavior–based cohort predictions move the needle


Here’s what makes these predictions possible

By now, one thing’s clear: predictive LTV modeling changes the game, but only if you can act on it. It’s not about having smarter forecasts sitting in a slide deck. It’s about making better decisions while they still matter.

That’s where Campaignswell proves its worth.

Everything we’ve talked about — early signals, cohort segmentation, shifting spend mid-flight, catching creative fatigue before ROAS drops — is baked into how Campaignswell works. It was built not just to model behavior, but to turn insight into action.

You get a focused view into how your cohorts are behaving, what’s actually driving value, and where shifting your budget will make the biggest difference, while there’s still time to act.

We’ve seen teams use it to:

- cut weeks off their decision cycles,

- save six figures by spotting underperforming cohorts early,

- and scale campaigns with full confidence that the value is actually there.

Because at the end of the day, predicting LTV is only useful if it changes what you do next. And with Campaignswell, it does.

Ready to see where your next six months of growth could come from?

Book your demo!

Co-founder & CEO at Campaignswell