App install campaigns: what actually works in 2025

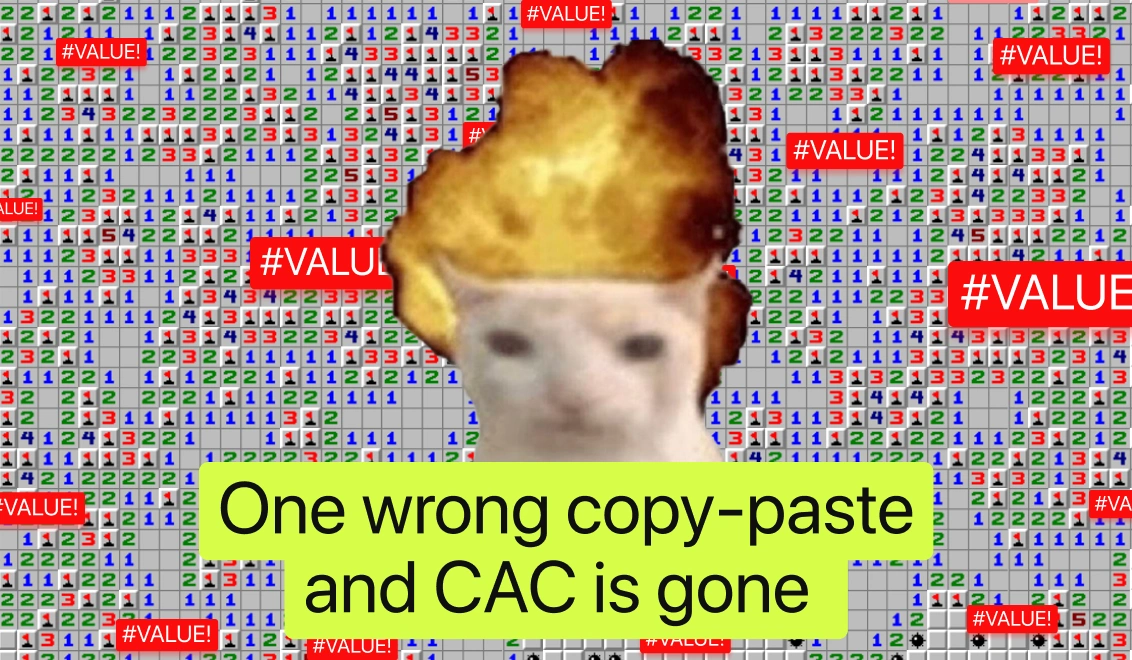

Imagine this: you launch a promising app install campaign on TikTok. CTR looks solid, installs start flowing in, and your team’s Slack lights up with early optimism. Fast forward 48 hours: retention drops off a cliff, trial conversions are nowhere, and your CAC has quietly doubled. Sound familiar?

Welcome to app install campaigns in 2025. The space is louder, more expensive, and brutally less forgiving. Privacy walls keep rising, SKAN still clouds your iOS truth, and every platform wants more data than you’re comfortable handing over. Meanwhile, the pressure to hit ROAS targets isn’t going away. You’re expected to be fast, smart, and profitable at the same time.

And while some teams are still cobbling together dashboards like it’s 2018, others are scaling aggressively by changing how they think about creative, platforms, bidding, and attribution. This guide is for the latter.

Let’s unpack what UA teams are really doing in 2025, so you can skip the faceplants and quietly clean up.

Rethink the funnel: pre-click strategy is the power move in app installs

“Click-to-install” is a shallow metric. In 2025, top teams obsess over pre-click intent. One health app team tested three creatives: one told a user transformation story, another showed product features, and the third listed generic benefits. The story-based version didn’t drive higher CTR, but its users were 2x more likely to return after 7 days. Same clicks, way better cohort.

They learned the hard way that pre-qualifying users with storytelling outperformed hyped-up product shots. Instead of over-optimizing for impressions, they focused on filtering in motivated users, those with a clear “why” before tapping install.
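To make the "same clicks, better cohort" idea concrete, here is a minimal sketch of comparing day-7 retention per creative. The install records, creative names, and the `d7_retention_by_creative` helper are all hypothetical and are not the health app team's data or tooling.

```python
from collections import defaultdict

# Hypothetical install records: (creative, user_id, returned_on_day_7).
# In practice these would come from your MMP or product analytics export.
installs = [
    ("story", "u1", True),
    ("story", "u2", True),
    ("story", "u3", False),
    ("story", "u4", False),
    ("features", "u5", True),
    ("features", "u6", False),
    ("features", "u7", False),
    ("features", "u8", False),
]


def d7_retention_by_creative(rows):
    """Return {creative: share of installers who came back on day 7}."""
    installed = defaultdict(int)
    retained = defaultdict(int)
    for creative, _user_id, returned in rows:
        installed[creative] += 1
        if returned:
            retained[creative] += 1
    return {creative: retained[creative] / installed[creative] for creative in installed}


retention = d7_retention_by_creative(installs)
for creative, rate in sorted(retention.items()):
    print(f"{creative}: D7 retention {rate:.0%}")
```

With real data you would want enough installs per creative before reading anything into the gap; four users per creative is only there to keep the example readable.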

If this team had been running predictive LTV analysis per creative, like Campaignswell enables, they could have reached this conclusion in half the time. Campaignswell gives you cohort-level retention by creative and predicts LTV trends on day 1, not day 30.

Another example came from a parenting app. They launched ads with aggressive discount messaging: great CPI, terrible day-1 engagement. When they switched to content that addressed sleep-deprived parents with empathy, not promos, installs dropped slightly, but retention tripled. Again, a classic case of shallow click intent vs meaningful install intent.

Why platform fit beats platform scale

In 2025, you win by syncing your creative to where users already have their guard down and curiosity up. One dating app team balanced:

- Meta for high-income, 30+ users with long-term intent

- TikTok for fast-paced creative discovery and volume

- Reddit for niche affinity groups like gamers or outdoor enthusiasts

They saw lower CPIs on TikTok but found that those users churned the fastest. Meta had fewer installs but a 60% higher LTV. Reddit had a small volume but top-tier retention from engaged community members.
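A rough sketch of why the cheapest channel is not always the best one: rank the same channels by CPI and by ROAS and compare the order. The spend, install, and revenue figures below are invented for illustration, and `ChannelStats` and `rank_by_roas` are hypothetical names, not part of any platform's API.

```python
from dataclasses import dataclass


@dataclass
class ChannelStats:
    name: str
    spend: float     # total ad spend in USD (illustrative)
    installs: int
    revenue: float   # cohort revenue to date in USD (illustrative)

    @property
    def cpi(self) -> float:
        # Cost per install.
        return self.spend / self.installs

    @property
    def ltv(self) -> float:
        # Revenue per install so far: a simple realized-LTV proxy.
        return self.revenue / self.installs

    @property
    def roas(self) -> float:
        # Return on ad spend.
        return self.revenue / self.spend


channels = [
    ChannelStats("TikTok", spend=1000.0, installs=500, revenue=800.0),
    ChannelStats("Meta", spend=1000.0, installs=250, revenue=1500.0),
    ChannelStats("Reddit", spend=200.0, installs=50, revenue=320.0),
]


def rank_by_roas(stats):
    """Sort channels from highest to lowest ROAS."""
    return sorted(stats, key=lambda c: c.roas, reverse=True)


for c in rank_by_roas(channels):
    print(f"{c.name}: CPI ${c.cpi:.2f}, LTV ${c.ltv:.2f}, ROAS {c.roas:.2f}")
```

In this made-up data, TikTok wins on CPI but lands last on ROAS, which is the pattern the dating app team described.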

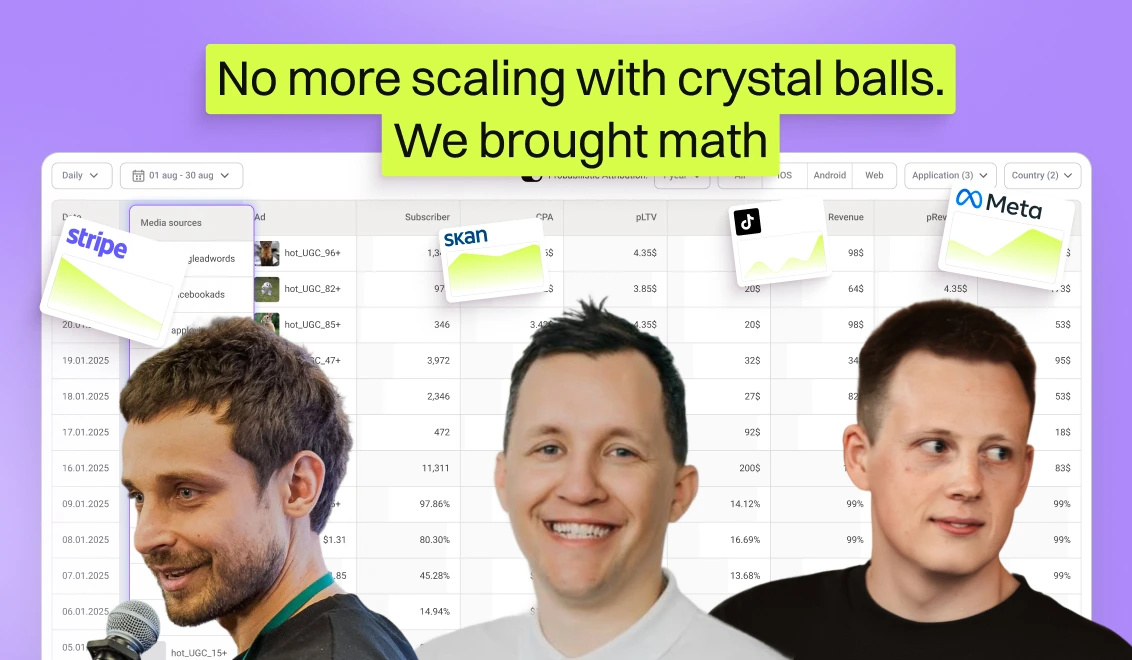

The missing link? They struggled to bring these signals together into one source of truth. With Campaignswell, they could have unified all channel-level spend and performance data, including LTV and trial conversion, into one dashboard, comparing efficiency per platform without delay.

Another growth marketer managing a language learning app said they shifted budget away from YouTube after seeing long view times but nearly zero trial starts. In contrast, Snap delivered higher trial conversions despite worse engagement metrics. So, intent lives in user behavior, not just view time.

This pattern reflects a broader shift in how UA teams are thinking. Liftoff’s 2025 App Marketer Survey shows that apps continue to spend heavily on major networks like TikTok, Meta, and Google Ads. Yet, the real shift is in how spend is weighed. About 60% of marketers now name ROAS as their top goal, signaling a move away from chasing install volume toward funding channels that drive retention and value over time.

Creative testing in app install campaigns: why feedback speed beats volume

Creative shelf life has shrunk to days, not weeks. Teams that wait on post-mortem dashboards lose precious ground. One fintech marketer shared they cycle creatives in and out every 3–4 days. Their cadence:

- Monday: new hooks based on last week’s insights

- Wednesday: A/B variants on performance leaders

- Friday: pause underperformers based on day-3 metrics

About 80% of ads don’t make it past one week. CTR alone isn’t enough; they use early engagement and behavioral indicators to call winners.
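The Friday step in that cadence can be expressed as a simple rule. The sketch below is one possible version, assuming day-3 retention and trial rate as the early indicators; the thresholds, field names, and the `decide` function are hypothetical and would need tuning to your own app.

```python
from dataclasses import dataclass


@dataclass
class CreativeDay3:
    ad_id: str
    installs: int
    day3_active_users: int   # installers still active on day 3
    trial_starts: int


# Assumed thresholds, chosen only for illustration.
MIN_INSTALLS = 100           # below this, the sample is too small to judge
MIN_DAY3_RETENTION = 0.20
MIN_TRIAL_RATE = 0.05


def decide(creative: CreativeDay3) -> str:
    """Return 'keep_testing', 'pause', or 'scale' from day-3 signals."""
    if creative.installs < MIN_INSTALLS:
        return "keep_testing"
    retention = creative.day3_active_users / creative.installs
    trial_rate = creative.trial_starts / creative.installs
    if retention < MIN_DAY3_RETENTION or trial_rate < MIN_TRIAL_RATE:
        return "pause"
    return "scale"


candidates = [
    CreativeDay3("hook_a", installs=50, day3_active_users=2, trial_starts=0),
    CreativeDay3("hook_b", installs=200, day3_active_users=20, trial_starts=15),
    CreativeDay3("hook_c", installs=200, day3_active_users=60, trial_starts=14),
]
for c in candidates:
    print(c.ad_id, decide(c))
```

The minimum-installs guard matters as much as the thresholds: it keeps a low-volume creative from being paused on noise.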

But here’s the gap: they still rely on exports and spreadsheets to connect ads to in-app retention. Campaignswell would let them automatically map ad IDs to downstream user behavior and project 7-day revenue from day-1 signals, cutting testing time in half.

One gaming studio tests 12–15 creatives per week. They look at ROAS at day 7, but their tests don’t scale fast enough because they lack predictive creative scoring. That’s exactly where a tool like Campaignswell could flag probable losers early using machine learning-trained creative signals.

Liftoff’s Mobile Ad Creative Index (2025) confirms that AI plays a central role in creative production, powering faster iteration, testing, and interactive formats that outperform static ads. Top-performing brands are combining AI tools, emotion-driven storytelling, and UGC formats to double down on what users actually respond to (1.1 million creatives analyzed). AppsFlyer’s 2025 report shows high-performing brands produce thousands of creative variations each quarter, emphasizing variety and emotional storytelling over fewer polished versions.

Value-based bidding or bust

In 2025, bidding for installs is outdated. The winners have shifted to value-based strategies: feeding Meta and Google clean conversion data, targeting tROAS or VO campaigns.

A subscription fitness app saw their CAC drop from $4.80 to $3.65 (roughly 24%) in just over a week when they switched from optimizing for installs to optimizing for purchase events, connecting App Store revenue to ad platforms. The key: surfacing actual user value, not engagement proxies.

Another app that monetizes through in-app purchases failed to hit target ROAS until they stopped optimizing for trial starts and started feeding in-app purchase revenue into Google’s event tracking.

Liftoff’s report confirms that 74% of marketers cite ROAS as their most important KPI in 2025, and most are aligning bidding strategies around that north star.

At Campaignswell, we’re totally on board with the shift toward value-based metrics; it’s long overdue. But we’d argue it’s time to level up. If you’re serious about sustainable growth, the metric you really want to build around is LTV. Because LTV tells you who your best users are, what they’re worth over time, and how to find more of them. This belief is backed by what we’re seeing on the ground. One client increased campaign scale to over $600,000 per day by optimizing based on predictive LTV, getting a signal on which creatives and channels would drive long-term value.

Another team used Campaignswell to launch and optimize a new web-to-app funnel. By identifying high-LTV funnel paths early, before major spend, they scaled with precision. Within six months, that funnel accounted for 20% of their total company revenue.

Attribution isn’t broken, it’s just more honest now

SKAN changed the game, and attribution isn’t what it used to be. But smart UA teams aren’t trying to “fix” it. They’re adjusting how they interpret it.

One e-learning app saw fuzzy attribution from TikTok: SKAN only told them installs came from “campaign X.” They started pairing that with revenue cohort behavior instead of chasing click-level granularity.

After 3 weeks of trend-watching, they noticed trial-to-subscribe conversion was 1.6x higher among users from TikTok, even though the volume was lower. So they moved 30% of budget from Meta to TikTok.
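This kind of directional read needs only campaign-level totals, not click-level data. A minimal sketch, assuming you can count trials and subscriptions per source over the observation window; the counts and the `trial_to_sub_rate` helper are hypothetical and are not the e-learning team's numbers.

```python
def trial_to_sub_rate(trials: int, subscriptions: int) -> float:
    """Share of trials that converted to a paid subscription."""
    if trials <= 0:
        raise ValueError("need at least one trial to compute a rate")
    return subscriptions / trials


# Hypothetical three-week totals per source (campaign-level granularity only).
cohorts = {
    "TikTok": {"trials": 250, "subscriptions": 60},
    "Meta": {"trials": 800, "subscriptions": 120},
}

rates = {
    source: trial_to_sub_rate(c["trials"], c["subscriptions"])
    for source, c in cohorts.items()
}
lift = rates["TikTok"] / rates["Meta"]
print(f"TikTok converts trials at {lift:.1f}x the Meta rate")
```

Note that the lower-volume source can still come out ahead on rate, which is why the comparison is made on conversion share rather than raw subscription counts.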

That’s exactly the kind of directional insight Campaignswell excels at: predictive models show probable LTV by source, so even without perfect attribution, teams can act on revenue patterns.

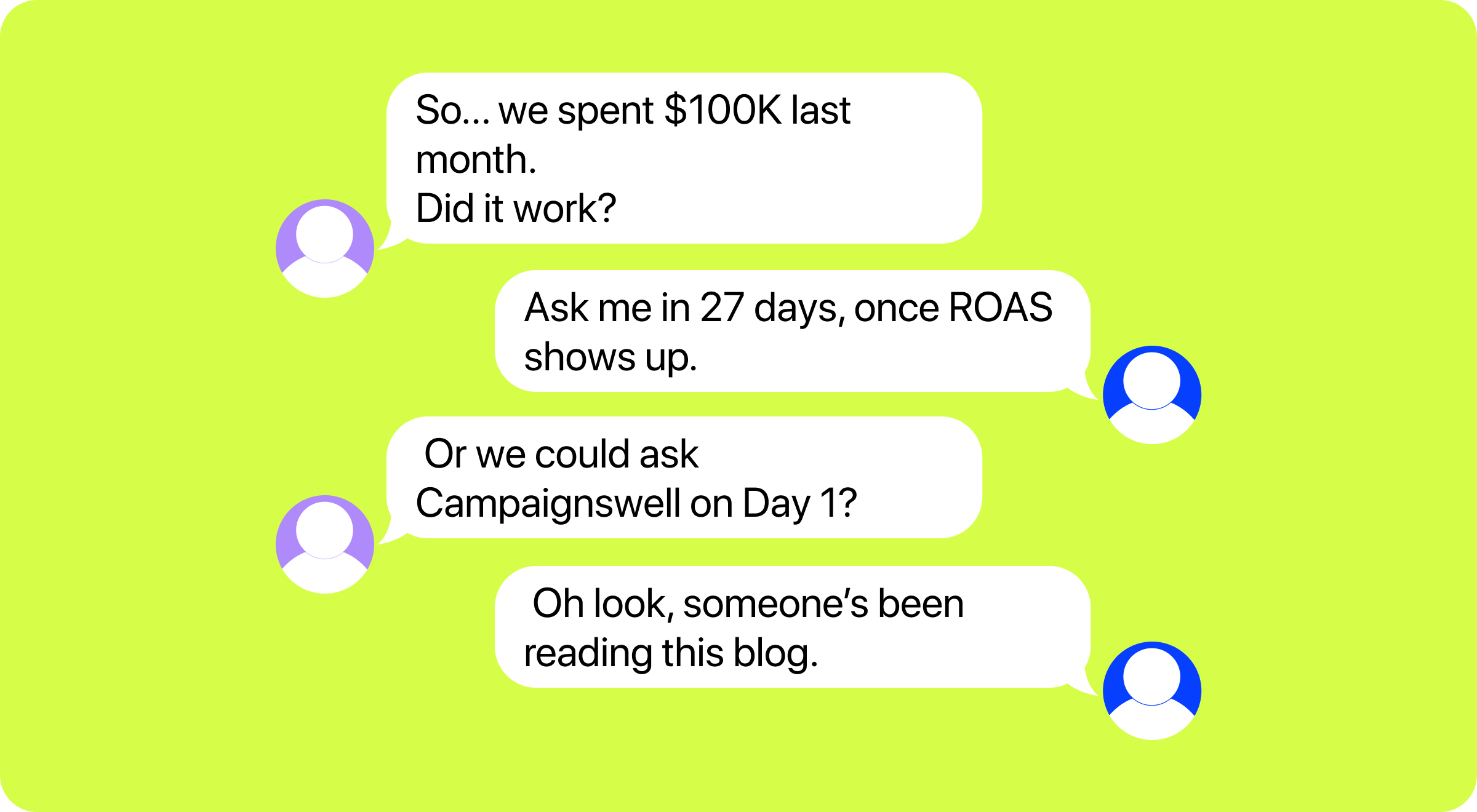

Another example: a lifestyle app running VO campaigns couldn’t tell if a new creative had worked until 21 days later when ROAS finally showed up. Campaignswell would have flagged the creative’s decline in predicted LTV on day 3, letting them swap sooner.

According to Liftoff, 67% of marketers report some familiarity with Apple’s AdAttributionKit, and 31% are running re-engagement campaigns on iOS, despite ongoing challenges with SKAN transparency.

The metrics that actually drive decisions in 2025

CPI means less and less. These metrics are the new north stars:

- Predicted LTV by creative, not campaign

- CAC payback period (ideally under 14 days for subscription apps)

- ROAS trendlines by cohort

- Funnel velocity (time from click to conversion)
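Two of these metrics are easy to compute once the inputs exist. The sketch below shows one plausible definition of each: payback as the first day cumulative revenue per user covers CAC, and funnel velocity as the median time from click to conversion. The function names and sample numbers are assumptions for illustration, and teams define both metrics in slightly different ways.

```python
from datetime import datetime
from statistics import median


def cac_payback_days(cac, daily_revenue_per_user):
    """First day (1-indexed) on which cumulative revenue per user covers CAC.

    Returns None if CAC is not recovered within the observed window.
    """
    cumulative = 0.0
    for day, revenue in enumerate(daily_revenue_per_user, start=1):
        cumulative += revenue
        if cumulative >= cac:
            return day
    return None


def funnel_velocity_hours(events):
    """Median hours from ad click to conversion across (clicked, converted) pairs."""
    deltas = [
        (converted - clicked).total_seconds() / 3600
        for clicked, converted in events
    ]
    return median(deltas)


# Illustrative inputs: average revenue per acquired user on each day after install.
payback = cac_payback_days(4.0, [0.5, 0.5, 1.0, 1.0, 1.0, 0.5])

# Illustrative (click time, conversion time) pairs for three users.
velocity = funnel_velocity_hours([
    (datetime(2025, 1, 1, 10), datetime(2025, 1, 1, 12)),
    (datetime(2025, 1, 1, 9), datetime(2025, 1, 2, 9)),
    (datetime(2025, 1, 3, 8), datetime(2025, 1, 3, 14)),
])

print(f"CAC payback: day {payback}; median click-to-conversion: {velocity:.0f}h")
```

The median is used for velocity so that a few very slow converters do not dominate the number.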

This shift in metrics matters because it unlocks real outcomes. One team behind an AI companion app applied this thinking using Campaignswell. Instead of chasing CTR or early install numbers, they focused on predicted LTV by creative in the first few days. That let them identify which funnel paths actually converted into revenue, and double down early. Within six months, that single web-to-app funnel was driving 20% of their entire company revenue.

The challenge is making this level of precision scalable. Testing and ranking creatives by LTV or funnel velocity sounds great until you’re buried in manual exports. That’s exactly where tools like Campaignswell come in. They automate creative scoring based on pLTV, ROAS trends, and retention patterns, so teams can spend less time juggling dashboards and more time making sharp decisions early.

Mistakes to dodge if you want to win in 2025

The same traps keep showing up:

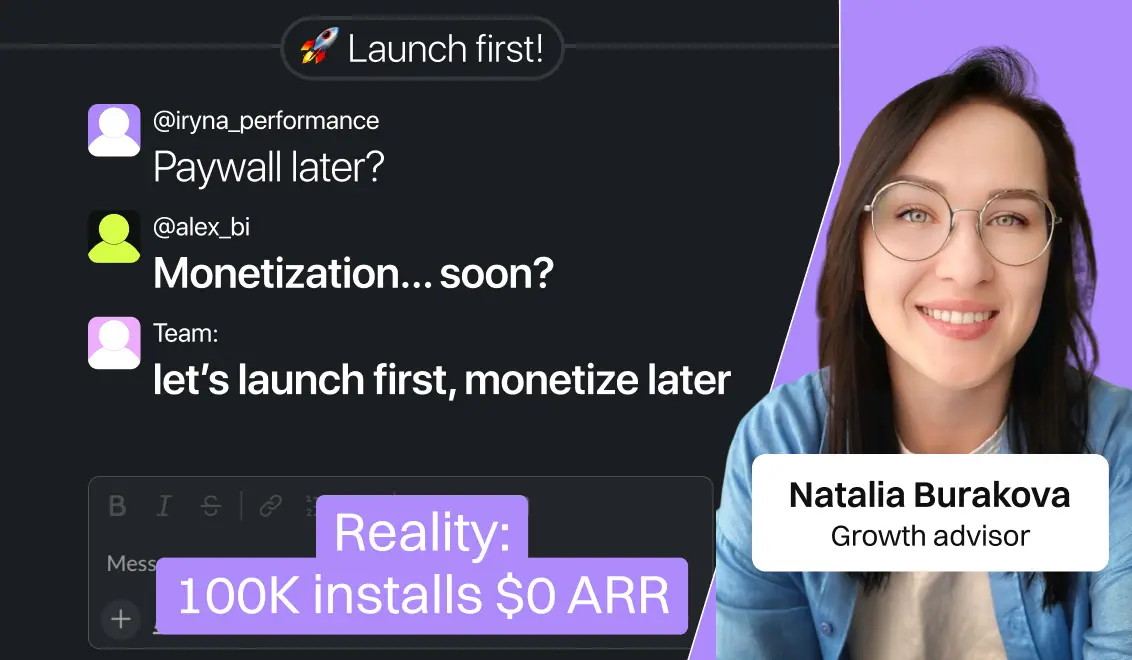

- Scaling winners before validating LTV or ROAS with real data

- Treating platform ROAS like gospel

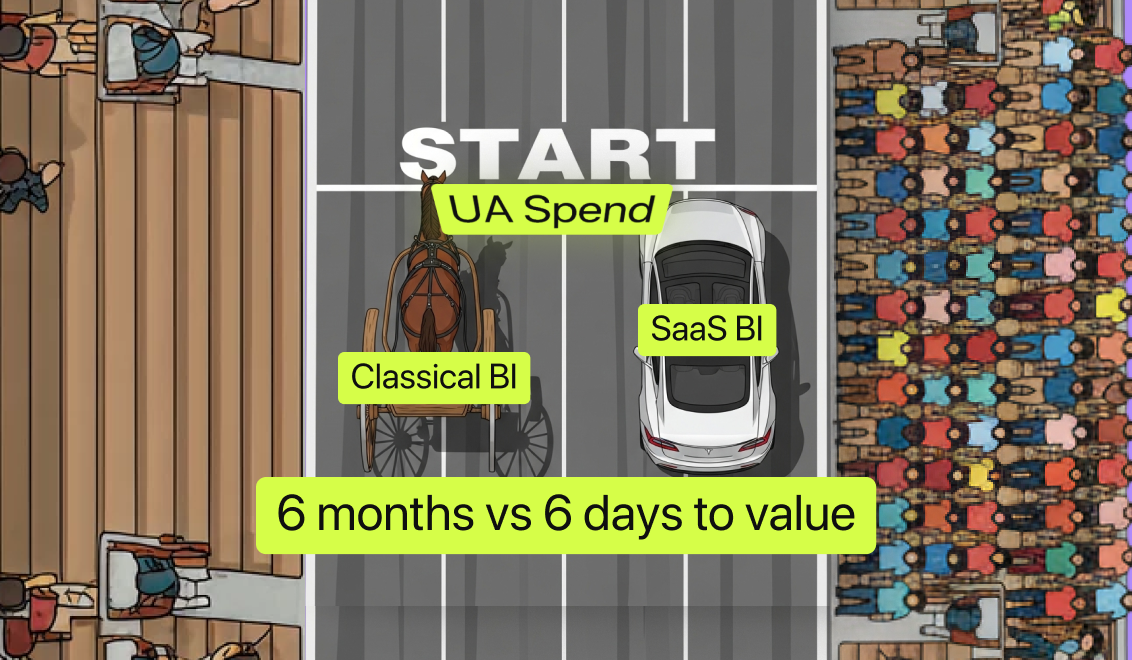

- Delegating UA data to BI teams and losing agility

- Underestimating the organic lift from paid bursts

- Waiting until day 30 to declare creative winners

Most of these come down to slow or broken feedback loops. With the right tooling, these aren’t hard to fix.

What you can do next

If you’re running UA campaigns with more questions than answers, here’s where to start:

- Audit your creative testing workflow: are you waiting too long to kill underperformers?

- Check your attribution: do you trust your sources, or are you still guessing?

- Compare ROAS and LTV across platforms, not just CPI.

- Talk to your product and finance teams, and align on CAC and payback goals.

- And if your dashboards are still giving you weekly reports instead of daily decisions, it might be time to rethink your stack.

2025 rewards the teams that stay sharp and move fast: the ones who filter noise early, act on the right signals, and know which levers actually move revenue.

If your team is serious about growing profitably, you don’t need 10 tools. You need the right signal, delivered in time to act.

That’s where tools like Campaignswell come in: unifying the data, surfacing the signal, and helping you scale with confidence.

Curious how this could look for your team?

Let’s jump on a quick demo and walk through it together.

Co-founder & CEO at Campaignswell